Unsanctioned AI tools. Patchy access controls. Unmanaged apps and devices. And of course, compromised credentials. These are the issues revealed in the 1Password Annual Report 2025: The Access-Trust Gap.

The report is based on a survey of over 5,000 knowledge workers, IT and security professionals, and CISOs, and it captures a moment of profound technological and cultural transition. Companies are still playing catch-up to the last few years of change: the rise of hybrid work, the SaaS explosion, the blurred lines between work and personal devices, and the arrival of generative AI. IT and security teams are discovering that their go-to tools for securing identities and managing access, such as SSO and MDM, weren’t designed for this world.

The result is a widening Access-Trust Gap: the divide between the types of access that security and IT teams can control, and the reality of how people (and now AI agents) access sensitive data in practice.

The survey data reveals four areas where the Access-Trust Gap is widest and where unsanctioned access poses the greatest threat:

- AI-based tools

- SaaS apps

- Credentials

- Devices

In this blog, we’ll address the first section of the report, on generative AI tools. We’ll walk through some of the report’s most eye-opening findings and how IT and security teams can translate them into actionable priorities.

We’ll also explore how 1Password helps close these gaps via 1Password Extended Access Management, a suite of solutions that includes our Enterprise Password Manager, Trelica by 1Password, and 1Password Device Trust.

AI use is high, but policy compliance is low

It should come as no surprise that enthusiasm for generative AI is high, as leaders and workers experiment with AI-based tools to improve productivity and unlock competitive advantages. However, many companies lack clearly defined AI policies, much less the technological guardrails to enforce them.

“I know we’ve got data going into these LLMs that we don’t have control over. The best we can do is sign enterprise agreements that offer some legal protections, but if someone uses a tool we don’t have an agreement for, there’s no protection for us.”

Nick Tripp, CISO, Duke University

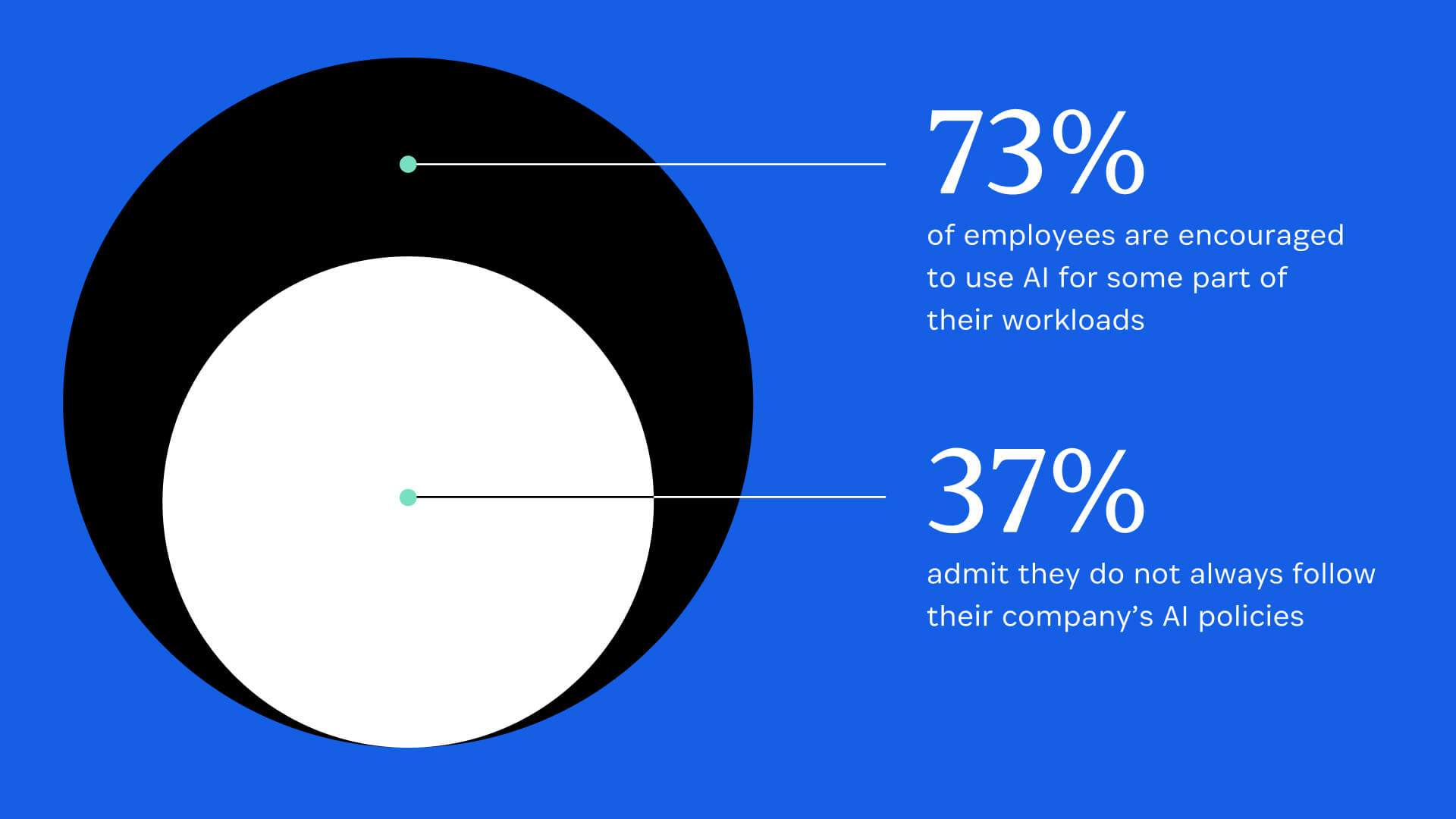

AI governance statistics from the report

- 73% of employees are encouraged to use AI for at least part of their work, but 37% admit they do not always follow their company’s AI policies.

- 27% of employees have worked on AI-based applications that their employers did not approve.

- 16% of non-IT/security employees believe their company does not have a generative AI policy.

- Only 6% of IT and security professionals believe their company lacks an AI policy, suggesting a gap in awareness and communication between IT/security teams and less technical employees.

It’s worth pausing on the finding that 37% of employees admit they do not always follow their company’s AI policies. This points to two shortfalls: a lack of technological controls to enforce AI usage policies, and a lack of education to convince employees that the risks of unsanctioned AI outweigh any perceived rewards. Any attempt to govern AI-based tools properly must address both.

Imperative: AI governance

Companies have a clear interest in ensuring that employees use only approved AI-based tools for work tasks, especially tasks that involve processing sensitive customer, employee, or internal data. Unsanctioned AI tools can absorb sensitive data into training models, generate harmful outputs, or even function as outright malware. In every case, shadow AI poses a threat to compliance, security, and auditability.

With that in mind, IT’s priorities for AI governance should include the following:

- Maintain a complete inventory of AI tools in use at your organization and conduct regular audits.

- Establish clear policies, enforce appropriate AI usage, and guide users toward safe tools and behaviors.

- Invest in controls to ensure only company-sanctioned AI tools can access company data.

How 1Password helps close the Access-Trust Gap for AI

Closing the Access-Trust Gap for AI means ensuring that employees can access the tools they need to be productive while putting guardrails in place to stop unsanctioned and dangerous AI. 1Password is committed to helping companies embrace AI without sacrificing security, and those security principles are reflected in each solution within Extended Access Management.

With that in mind, let’s go through each of the priorities listed above and discuss how 1Password helps to address them.

Maintain a complete inventory of AI tools in use at your organization and conduct regular audits

Trelica by 1Password is a SaaS management platform that enables IT teams to discover every SaaS tool in use across their organization, including AI-based tools. It provides continuous discovery, offers risk and compliance profiles for both managed and unmanaged SaaS applications, and lets IT admins customize alerts and define automated actions based on specific criteria.
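To make "customize alerts based on specific criteria" concrete, the idea can be pictured as admin-defined rules evaluated over a discovered app inventory. This is a minimal, hypothetical sketch: `App`, `AlertRule`, `evaluate`, and the app names are invented for illustration and are not Trelica's data model or API.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class App:
    """One discovered SaaS app (hypothetical record shape, not Trelica's)."""
    name: str
    category: str      # e.g. "ai", "crm"
    sanctioned: bool   # has IT approved this app?
    users: int         # employees observed using it


@dataclass
class AlertRule:
    """A hypothetical admin-defined criterion that should raise an alert."""
    description: str
    matches: Callable[[App], bool]


def evaluate(inventory: list[App], rules: list[AlertRule]) -> list[tuple[str, str]]:
    """Return (app name, rule description) for every rule an app triggers."""
    alerts = []
    for app in inventory:
        for rule in rules:
            if rule.matches(app):
                alerts.append((app.name, rule.description))
    return alerts


# Illustrative inventory; the app names are made up.
inventory = [
    App("Company AI Workspace", "ai", sanctioned=True, users=1200),
    App("FreeSummarizer", "ai", sanctioned=False, users=14),
    App("CRM Suite", "crm", sanctioned=True, users=300),
]

rules = [
    AlertRule("Unsanctioned AI tool in use",
              lambda a: a.category == "ai" and not a.sanctioned),
    AlertRule("Unsanctioned app with 10+ users",
              lambda a: not a.sanctioned and a.users >= 10),
]

alerts = evaluate(inventory, rules)
for name, why in alerts:
    print(f"{name}: {why}")
```

Keeping rules as small predicates makes a regular audit a matter of re-running `evaluate` over the latest inventory; an automated action (such as opening a review ticket) could hang off each returned alert.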

Invest in controls to ensure only company-sanctioned AI tools can access company data

Employees are incentivized to use whatever tools make them most productive. Trelica by 1Password supports this securely via a self-serve app hub, so employees can easily access sanctioned AI tools rather than seeking out shadow AI.

Meanwhile, 1Password Device Trust helps communicate and enforce AI policies. Admins can create a Check that scans employee devices for unsanctioned AI tools and prevents the device from authenticating to managed apps until the blocklisted tool is uninstalled.
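The gating behavior described above (fail while a blocklisted tool is installed, pass again once it is removed) can be sketched as a simple blocklist check. This is a hypothetical illustration only: `BLOCKLIST`, `run_blocklist_check`, `may_authenticate`, and the tool names are invented, and this is not how 1Password Device Trust Checks are actually authored or run.

```python
from collections.abc import Iterable
from dataclasses import dataclass

# Hypothetical admin-maintained blocklist of unsanctioned AI tools (lowercase).
BLOCKLIST = {"freesummarizer", "shadowchat desktop"}


@dataclass
class CheckResult:
    passed: bool
    blocked_tools: list[str]


def run_blocklist_check(installed_apps: Iterable[str], blocklist: set[str]) -> CheckResult:
    """Fail the check if any installed app (case-insensitive) is on the blocklist."""
    found = sorted(app for app in installed_apps if app.lower() in blocklist)
    return CheckResult(passed=not found, blocked_tools=found)


def may_authenticate(result: CheckResult) -> bool:
    """Allow sign-in to managed apps only while the check passes."""
    return result.passed


# Device with a blocklisted tool installed: sign-in is blocked.
before = run_blocklist_check(["Slack", "FreeSummarizer", "Zoom"], BLOCKLIST)
print(before, may_authenticate(before))

# Same device after the tool is uninstalled: sign-in is allowed again.
after = run_blocklist_check(["Slack", "Zoom"], BLOCKLIST)
print(after, may_authenticate(after))
```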

Establish clear policies, enforce appropriate AI usage, and guide users toward safe tools and behaviors

Crucially, when Device Trust blocks a user from authenticating, it explains the rationale for the decision in plain language, so employees are continually educated about their company’s security policies. For example, if the Device Trust agent detects an employee using a personal ChatGPT account, it blocks them and directs them to the company-sanctioned workspace.
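A plain-language block message pairs three pieces of information: what was detected, why the policy exists, and where to go instead. The sketch below is hypothetical: `Violation`, `explain_block`, the wording, and the example.com URL are invented for illustration and do not reflect 1Password's actual messages.

```python
from dataclasses import dataclass


@dataclass
class Violation:
    detected: str                # what the agent found, in plain words
    reason: str                  # why the policy exists
    sanctioned_alternative: str  # where the user should go instead
    url: str                     # link to the approved tool (placeholder)


def explain_block(v: Violation) -> str:
    """Build a hypothetical plain-language message shown when sign-in is blocked."""
    return (
        f"Access blocked: we detected {v.detected}. {v.reason} "
        f"Please use {v.sanctioned_alternative} instead: {v.url}"
    )


msg = explain_block(Violation(
    detected="a personal ChatGPT account",
    reason="Company data may only be shared with AI tools covered by an enterprise agreement.",
    sanctioned_alternative="the company ChatGPT workspace",
    url="https://example.com/ai-workspace",
))
print(msg)
```

Ending the message with a direct link to the sanctioned tool turns each block into a nudge toward the approved path rather than a dead end.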


Close your Access-Trust Gap with 1Password

The rate of technological transformation isn’t slowing down anytime soon, so the best time to assess your own organization’s Access-Trust Gap and start closing it is now.

You can also read the full Access-Trust Gap report for the complete findings.

To learn more about how 1Password can help you secure your business without slowing you down, reach out to us today.

by Elaine Atwell on
