We recently shared that we’ll soon be rolling out a privacy-preserving telemetry system that will help us improve 1Password by leveraging aggregated, de-identified usage data. Here we’ll share technical details about how this system works and the steps we’ve taken to protect customer privacy while engaging with the resulting data.

Our goal is to understand more about how our growing customer base, not specific individuals, is using 1Password. Our intent is to pinpoint where and how we need to improve our products by studying and drawing insights from aggregate usage patterns.

While this is our goal, we are also 100% committed to our privacy and security standards, and our technical architecture is designed to align with our core privacy principles:

The passwords, credit card numbers, URLs, and other data that you save in your 1Password vaults are end-to-end encrypted using secrets that only you know. 1Password’s zero-knowledge architecture will remain unchanged. Our telemetry system cannot, by design, have insight into the end-to-end encrypted data you store in 1Password. That information is owned by and known only to you.

We will only collect what is needed to provide our service and build a better 1Password experience. We’ve designed our telemetry system so it only collects what we truly need and nothing else. You can learn more about this approach in our previous blog post.

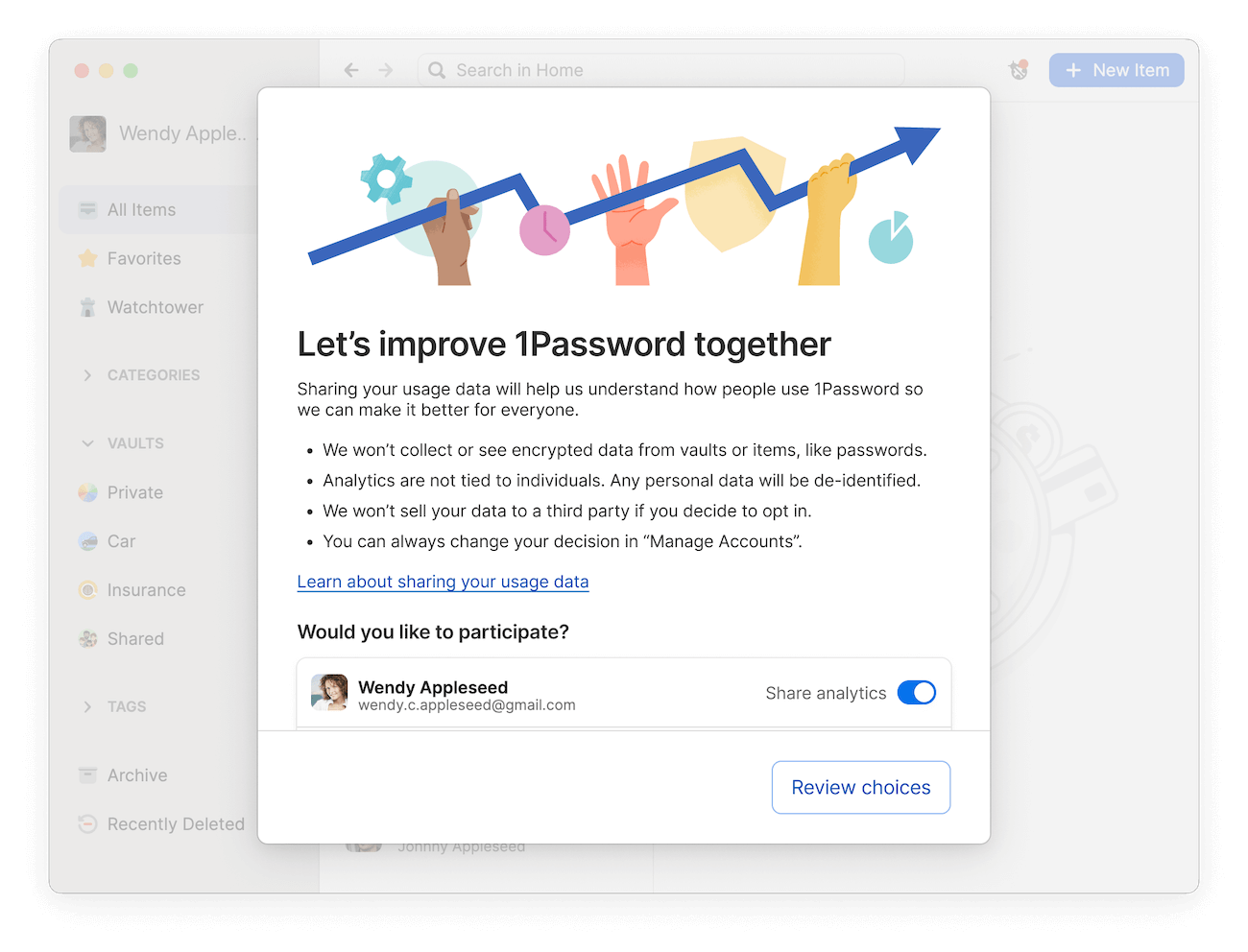

We won’t collect product usage telemetry data without your awareness and consent. That’s why you’ll see the following in-app prompt when the rollout reaches your Individual or Family account, letting you choose whether you would like to participate:

You can change these preferences at any time in “Manage Account”.

How we’re doing data collection

The telemetry system we’re adding to 1Password, which has been developed by our internal teams and vetted by our security and privacy experts, is built to collect “metrics” and “events”.

An event represents an action or moment that occurred inside a 1Password app. For example, an event could be “unlocking 1Password”. A metric is a measurement calculated client-side (to maintain user privacy) using multiple events, such as the time between when a 1Password invitation is accepted and when the subsequent profile is set up.
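The following minimal Python sketch makes the difference between events and metrics concrete by computing a client-side metric. The `Event` type, the event names `invitation_accepted` and `profile_setup_completed`, and the `time_to_setup` helper are hypothetical and are not 1Password’s actual schema. The sketch only shows that the metric is derived on the device from several events, so only the resulting duration would need to leave the app.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Event:
    """A single action inside an app (hypothetical shape)."""
    name: str
    timestamp: datetime


def time_to_setup(events: list[Event]) -> Optional[timedelta]:
    """Hypothetical client-side metric: time from accepting an invitation
    to finishing profile setup. Returns None if either event is missing
    or they are out of order."""
    accepted = next((e.timestamp for e in events if e.name == "invitation_accepted"), None)
    completed = next((e.timestamp for e in events if e.name == "profile_setup_completed"), None)
    if accepted is None or completed is None or completed < accepted:
        return None
    return completed - accepted


events = [
    Event("invitation_accepted", datetime(2023, 7, 4, 16, 0)),
    Event("app_unlock_successful", datetime(2023, 7, 4, 16, 2)),
    Event("profile_setup_completed", datetime(2023, 7, 4, 16, 5)),
]
print(time_to_setup(events))  # 0:05:00
```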

Everything starts with the consent module shared above, which will be rolled out in phases. The choice you make will be synced back to our systems. This means you only need to make this choice once for an account, and it will be synced across every 1Password app that account is signed in to. You will be able to change this setting at any time.
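The sketch below shows how a single account-level choice could gate telemetry in every app. It assumes each app sees the synced setting as a simple per-account flag. `ConsentStore`, `App`, and `record` are hypothetical names. Treating "no choice yet" as "declined" follows the commitment not to collect telemetry without consent.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentStore:
    """Stand-in for the account-level setting synced from the server
    (hypothetical: one boolean per account, shared by every signed-in app)."""
    by_account: dict[str, bool] = field(default_factory=dict)

    def set(self, account_id: str, allowed: bool) -> None:
        self.by_account[account_id] = allowed

    def allowed(self, account_id: str) -> bool:
        # No recorded choice is treated the same as "declined".
        return self.by_account.get(account_id, False)


class App:
    def __init__(self, name: str, account_id: str, consent: ConsentStore):
        self.name = name
        self.account_id = account_id
        self.consent = consent
        self.queued: list[str] = []

    def record(self, event_name: str) -> bool:
        """Queue an event only if the account has opted in."""
        if not self.consent.allowed(self.account_id):
            return False
        self.queued.append(event_name)
        return True


consent = ConsentStore()
ios = App("iOS", "acct-1", consent)
mac = App("Mac", "acct-1", consent)
ios.record("app_unlock_successful")  # False: no choice made yet
consent.set("acct-1", True)          # choice made once, in any app
mac.record("app_unlock_successful")  # True: applies to every app on the account
```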

Our telemetry system won’t disrupt your workflows.

Our privacy-preserving telemetry system will operate in the background without changing your daily experience with 1Password. We want to make sure we’re not getting in your way, so the telemetry system won’t block you from using 1Password or interrupt your work.

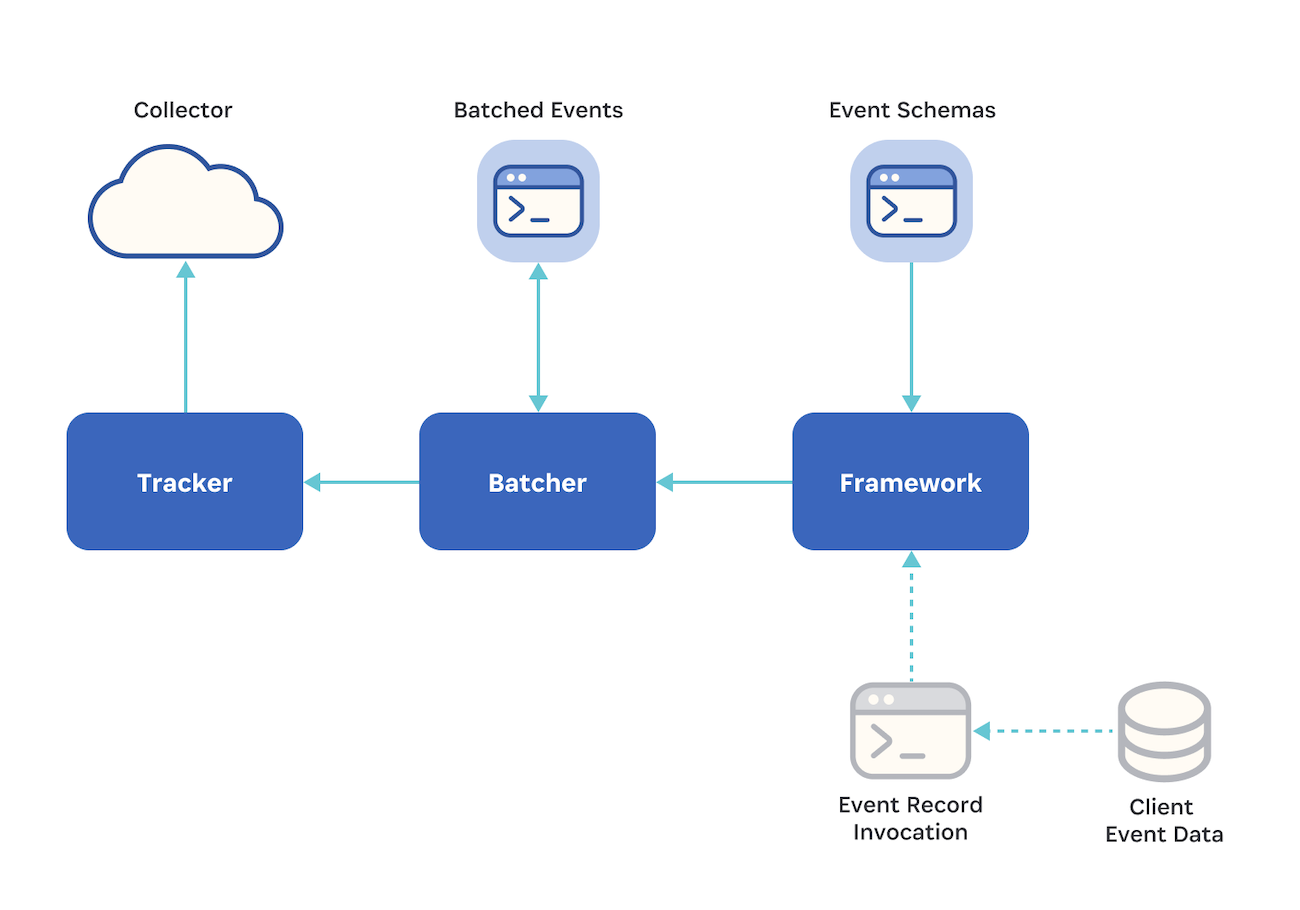

Behind the scenes, 1Password will fire an event when one of a predefined set of actions occurs. Depending on the app and its offline operations, the event may be sent to an intermediary layer called the batcher, which reduces the number of network calls. Once a set amount of time has passed or a set number of events has accumulated, the batcher sends the batch of events on to the tracker.
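The sketch below shows the batcher’s flush rule: send when either a count limit or an age limit is reached. The class name, the default limits of 50 events and 30 seconds, and the injectable clock are assumptions for illustration, since the actual thresholds aren’t stated here.

```python
import time
from typing import Callable, Optional


class Batcher:
    """Hypothetical batcher: buffers events and hands them to the tracker
    once max_events is reached or max_age_s seconds have passed since the
    first buffered event."""

    def __init__(self, send: Callable[[list[dict]], None],
                 max_events: int = 50, max_age_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.send = send
        self.max_events = max_events
        self.max_age_s = max_age_s
        self.clock = clock
        self.buffer: list[dict] = []
        self.first_at: Optional[float] = None

    def add(self, event: dict) -> None:
        if not self.buffer:
            self.first_at = self.clock()
        self.buffer.append(event)
        self.maybe_flush()

    def maybe_flush(self) -> None:
        """Flush on size or age. Call periodically as well as from add()."""
        if not self.buffer:
            return
        too_many = len(self.buffer) >= self.max_events
        too_old = self.clock() - self.first_at >= self.max_age_s
        if too_many or too_old:
            batch, self.buffer, self.first_at = self.buffer, [], None
            self.send(batch)  # one network call per batch instead of per event


# Usage with a fake clock so the time-based flush is easy to trigger.
sent: list[list[dict]] = []
now = [0.0]
b = Batcher(sent.append, max_events=3, max_age_s=10.0, clock=lambda: now[0])
for i in range(3):
    b.add({"event": "app_unlock_successful", "i": i})  # third add flushes a batch of 3
b.add({"event": "app_lock"})
now[0] = 11.0
b.maybe_flush()  # age limit passed, so the single buffered event is sent
```

In a real app, `maybe_flush` would also need to run on a timer or when the app closes. Otherwise a lone event could sit in the buffer until the next `add`.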

The tracker is responsible for converting that batch of events into the appropriate protocol, attaching any necessary metadata to contextualize the events, and sending it to the collector, which we’ll unpack in a moment. This is the point at which the data transitions from the customer’s app to our infrastructure.
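Below is a rough sketch of the tracker step. It assumes events arrive from the batcher as plain dictionaries. The envelope layout and the `to_payload` name are hypothetical and only loosely mirror field names from the raw event example in the next section. This is not Snowplow’s actual tracker protocol, and the network call to the collector is left out.

```python
import json
import uuid
from datetime import datetime, timezone


def to_payload(batch: list[dict], app: dict, device: dict) -> str:
    """Hypothetical tracker step: wrap each event with shared context
    metadata and serialize the batch as one JSON document for the collector."""
    sent_at = datetime.now(timezone.utc).isoformat()
    events = []
    for event in batch:
        events.append({
            "event_id": str(uuid.uuid4()),        # unique id per event
            "event_name": event["name"],
            "dvce_created_tstamp": event["created_at"],
            "dvce_sent_tstamp": sent_at,          # same for the whole batch
            "contexts": {"app_context": app, "device_context": device},
            "properties": event.get("properties", {}),
        })
    return json.dumps({"events": events})


payload = to_payload(
    [{"name": "app_unlock_successful",
      "created_at": "2023-07-04T16:30:18.018Z",
      "properties": {"unlock_method": "PASSWORD"}}],
    app={"name": "1Password for iOS", "version": "8.10.7"},
    device={"os_name": "iOS", "os_version": "16.5.1"},
)
# The tracker would then send `payload` to the collector.
```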

Collection architecture

Event data is stored in a collector hosted entirely within our own environment for security and privacy purposes. For this aspect of our collection architecture, we host an open-source data platform built by Snowplow.

All of the tools we use for event collection have been customized by our engineering teams. In some cases we’ve modified and adapted Snowplow to better align with our requirements and platforms. In other cases, we’ve developed entirely new code from scratch. In both scenarios, our goal has been to ensure that we maintain complete control over the data we collect and how we handle it.

We are committed to collecting only the minimum data necessary and not sharing this raw data outside of our infrastructure.

Raw events and storage

The event data in the collector is considered “raw”. It contains all the data and metadata that was originally collected from the app. Let’s look at an example of an app_unlock_successful event:

```json
{
  "app_id": "1",
  "platform": "mob",
  "etl_tstamp": "2023-07-04T20:58:44.903Z",
  "collector_tstamp": "2023-07-04T20:58:44.299Z",
  "dvce_created_tstamp": "2023-07-04T16:30:18.018Z",
  "event": "unstruct",
  "event_id": "#########################",
  "name_tracker": "core",
  "v_tracker": "rust-fork-0.1.0",
  "v_collector": "ssc-2.9.0-kinesis",
  "v_etl": "snowplow-enrich-kinesis-3.8.0",
  "user_ipaddress": "##.###.###.###",
  "network_userid": "#########################",
  "contexts_com_1password_core_app_context_2": [
    {
      "version": "8.10.7",
      "name": "1Password for iOS"
    }
  ],
  "contexts_com_1password_core_account_context_1": [
    {
      "account_uuid": "#########################",
      "account_type": "B",
      "billing_status": "A",
      "user_uuid": "#########################"
    }
  ],
  "contexts_com_1password_core_device_context_1": [
    {
      "device_uuid": "#########################",
      "os_name": "iOS",
      "os_version": "16.5.1"
    }
  ],
  "unstruct_event_com_1password_app_app_unlock_successful_1": {
    "unlock_method": "PASSWORD"
  },
  "dvce_sent_tstamp": "2023-07-04T20:58:43.581Z",
  "derived_tstamp": "2023-07-04T16:30:18.736Z",
  "event_vendor": "com.1password.app",
  "event_name": "app_unlock_successful",
  "event_format": "jsonschema",
  "event_version": "1-0-0"
}
```

Raw events exist within a “black box” for us. They are stored in an S3 bucket that our team members do not analyze. Instead, this component of the infrastructure is highly restricted, and all of our validations and subsequent steps in the data pipeline are automated. In fact, access to every component of this system is limited and maintained with role-based access control (RBAC) under the principle of least privilege.

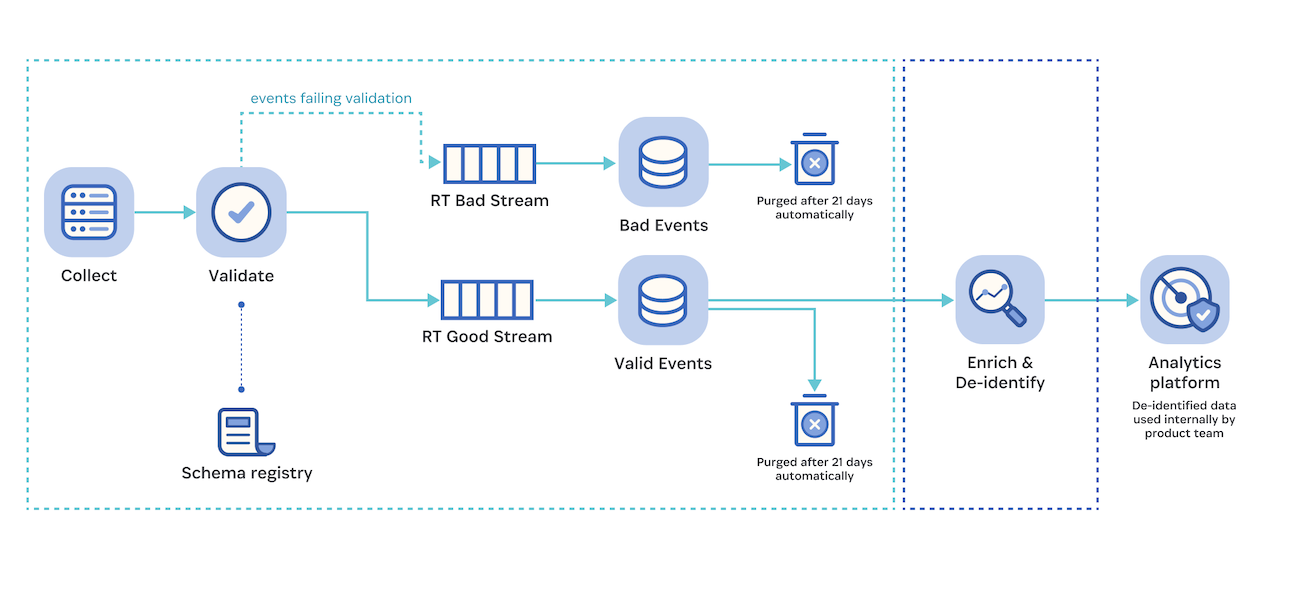

Raw events are validated against data schemas developed by 1Password to ensure they’re in a format our pipeline can process. If an event does not conform to our data schemas, it’s labeled as a “bad event.” All raw events and associated data, whether they pass validation or not, are purged after 21 days.
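The sketch below is a toy version of the validation and retention rules. It assumes each raw event carries its properties and the time it was collected. `UNLOCK_SCHEMA` is a hypothetical stand-in for 1Password’s real data schemas (the raw event’s event_format field indicates JSON Schema). `route` and `purge` are illustrative names.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema for the unlock event: required field -> expected type.
UNLOCK_SCHEMA = {"unlock_method": str}
RETENTION = timedelta(days=21)


def validate(properties: dict, schema: dict) -> bool:
    """True if exactly the expected fields are present with the expected types."""
    if set(properties) != set(schema):
        return False
    return all(isinstance(properties[k], t) for k, t in schema.items())


def route(event: dict) -> str:
    """Label an event 'good' or 'bad' depending on schema validation."""
    return "good" if validate(event["properties"], UNLOCK_SCHEMA) else "bad"


def purge(events: list[dict], now: datetime) -> list[dict]:
    """Keep only raw events younger than 21 days, whether good or bad."""
    return [e for e in events if now - e["collected_at"] < RETENTION]


now = datetime(2023, 7, 30, tzinfo=timezone.utc)
raw = [
    {"properties": {"unlock_method": "PASSWORD"},
     "collected_at": datetime(2023, 7, 4, tzinfo=timezone.utc)},
    {"properties": {"unlock_method": 1},  # wrong type, so a "bad event"
     "collected_at": datetime(2023, 7, 25, tzinfo=timezone.utc)},
]
labels = [route(e) for e in raw]  # ['good', 'bad']
kept = purge(raw, now)            # only the 2023-07-25 event is still retained
```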

Events that pass data schema validation proceed through our pipeline, where they undergo a series of transformations to be enriched and de-identified.

Enrichment and de-identification

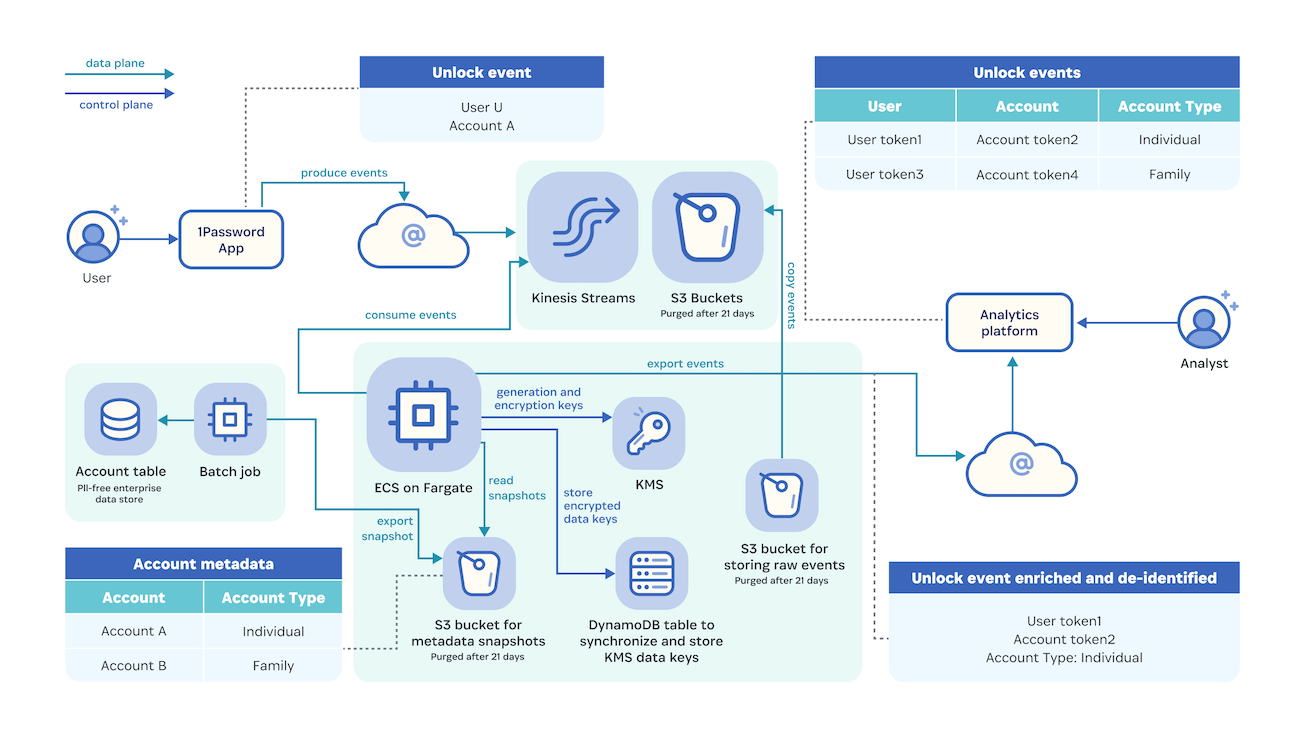

Valid events land in an AWS account dedicated to the enrichment and de-identification processes.

The enrichment process adds meaningful metadata that we can’t capture at the time an event is fired. The 1Password apps hold only limited information, and the details that would contextualize an event are stored elsewhere as service data.

The metadata added depends on the event type and can include information such as the account type and whether a trial period is active.
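Here is a sketch of per-event-type enrichment. It assumes the pipeline can look up service data by account UUID before de-identification runs. `AccountServiceData`, the `ENRICHMENTS` mapping, and the `import_completed` event name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccountServiceData:
    """Hypothetical service-side record that the apps don't carry themselves."""
    account_type: str
    trial_active: bool


# Which metadata fields are added for which event types (hypothetical mapping).
ENRICHMENTS = {
    "app_unlock_successful": ("account_type",),
    "import_completed": ("account_type", "trial_active"),
}


def enrich(event: dict, service: dict[str, AccountServiceData]) -> dict:
    """Return a copy of the event with per-event-type metadata added."""
    record = service.get(event["account_uuid"])
    enriched = dict(event)
    if record is not None:
        for name in ENRICHMENTS.get(event["event_name"], ()):
            enriched[name] = getattr(record, name)
    return enriched


service = {"acct-1": AccountServiceData(account_type="B", trial_active=True)}
e = enrich({"event_name": "import_completed", "account_uuid": "acct-1"}, service)
# e now also carries account_type="B" and trial_active=True
```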

After the enrichment process is done, we de-identify values from selected fields in the Snowplow schema. A number of strategies are employed here, such as cryptographically hashing (with HMAC-SHA256) any UUIDs collected. That cryptographic secret is generated and rotated on a quarterly basis using an AWS Lambda function. Once enriched and de-identified, the raw data is dropped, and only the de-identified events are moved forward in the pipeline.
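The following sketch shows one way to implement the keyed hashing step with Python’s standard hmac and hashlib modules. The field list comes from the raw event example. `hash_uuid`, `hash_ids`, and the locally generated 32-byte key (standing in for the secret managed by the Lambda function) are assumptions. A keyed HMAC has an advantage over a bare SHA-256 hash here. With a bare hash, anyone who already knows a UUID could recompute its hash and link it to events. An HMAC token can’t be recomputed without the secret key.

```python
import hashlib
import hmac
import secrets

UUID_FIELDS = ("account_uuid", "user_uuid", "device_uuid")


def hash_uuid(value: str, key: bytes) -> str:
    """HMAC-SHA256 of a UUID, hex-encoded (64 characters)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def hash_ids(event: dict, key: bytes) -> dict:
    """Return a copy of the event with every UUID field replaced by its token."""
    out = dict(event)
    for field in UUID_FIELDS:
        if field in out:
            out[field] = hash_uuid(out[field], key)
    return out


key = secrets.token_bytes(32)  # stand-in for the current quarter's secret
a = hash_ids({"user_uuid": "u-123", "event_name": "app_unlock_successful"}, key)
b = hash_ids({"user_uuid": "u-123", "event_name": "app_lock"}, key)  # hypothetical event
# Same user and same key give the same token, so events can still be
# grouped without revealing the underlying UUID.
```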

De-identification details

To improve 1Password, we don’t need precise timestamps of actions, specific account information, IP addresses associated with 1Password apps, or other sensitive data points. Aggregated, actionable data is our goal, as this will allow us to derive meaningful insights at scale. So before we analyze events, we aim to reduce the possibility of re-identifying individual users or having more information than necessary.

Here are some elements we will be de-identifying and the methods we’ll be using to achieve that:

| Element | Method |
|---|---|
| Event timestamps | Truncated to the hour |
| User UUID, account UUID, and device UUID | Hashed cryptographically with HMAC-SHA256; keys are rotated quarterly |
| Account size | Bucketed (for example, 0-5 users or 6+ users on a Family account) |
| IP address | Dropped |
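The timestamp, account-size, and IP rules from the table can be sketched as a single transform. The timestamp field names come from the raw event example. `account_size` is a hypothetical enriched field, and the function names are illustrative. UUID hashing is left out because it is the separate HMAC-SHA256 step described earlier.

```python
from datetime import datetime

# Timestamp fields from the raw event example; all share the same ISO format.
TIMESTAMP_FIELDS = (
    "etl_tstamp", "collector_tstamp", "dvce_created_tstamp",
    "dvce_sent_tstamp", "derived_tstamp",
)


def truncate_to_hour(ts: str) -> str:
    """Keep only the date and hour of an ISO-8601 UTC timestamp."""
    dt = datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")
    return dt.replace(minute=0, second=0, microsecond=0).strftime("%Y-%m-%dT%H:%M:%SZ")


def bucket_family_size(members: int) -> str:
    """Bucket boundaries follow the Family account example in the table."""
    return "0-5" if members <= 5 else "6+"


def deidentify(event: dict) -> dict:
    """Apply the timestamp, account-size, and IP rules to a copy of the event."""
    out = dict(event)
    out.pop("user_ipaddress", None)  # IP address: dropped entirely
    for field in TIMESTAMP_FIELDS:
        if field in out:
            out[field] = truncate_to_hour(out[field])
    if "account_size" in out:  # hypothetical enriched field
        out["account_size"] = bucket_family_size(out["account_size"])
    return out


clean = deidentify({
    "user_ipaddress": "203.0.113.7",
    "dvce_created_tstamp": "2023-07-04T16:30:18.018Z",
    "derived_tstamp": "2023-07-04T16:30:18.736Z",
    "account_size": 4,
    "event_name": "app_unlock_successful",
})
# clean == {"dvce_created_tstamp": "2023-07-04T16:00:00Z",
#           "derived_tstamp": "2023-07-04T16:00:00Z",
#           "account_size": "0-5", "event_name": "app_unlock_successful"}
```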

But why collect these data points in the first place?

You might be wondering: if you’re de-identifying some of the values associated with each event anyway, why collect that information in the first place?

Let’s consider the example of a timestamp. The timestamp in the raw event records when an action occurred, providing valuable insights into behavior patterns. But we don’t need to know the exact minute, second, or millisecond the event took place. That level of granularity could potentially identify individuals if combined with enough other data points.

So we will truncate that timestamp to the hour, rendering it statistically useless in a re-identification attack. That way, we’ll only know that the associated action was performed within a given hour. When combined with other events, we’ll still be able to learn a lot from this de-identified information. It’s the best of both worlds: valuable insights while protecting customer privacy.

Similarly, we don’t need to know the identity of the user who performed an action. That’s why our de-identification pipeline will generate a unique token in its place, refreshed every quarter, to prevent re-identification attacks that rely on accumulating dimensions or state changes over time. This will allow us to view usage patterns and derive insights without being able to identify individual users.

To maintain and protect customer privacy, we’ll rotate the keys for this hashing on a quarterly basis. Once the keys are rotated, everyone becomes a totally new person in our system: their hashed UUID will be different, and the one they had before will be gone.
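To illustrate why rotation makes everyone "a totally new person", the sketch below derives tokens with a different random key for each calendar quarter. `QuarterlyKeys` and `token` are hypothetical, and the sketch assumes events are processed in time order. The real secret is generated and rotated by an AWS Lambda function, which isn’t modeled here.

```python
import hashlib
import hmac
import secrets
from datetime import date
from typing import Optional


def quarter_of(d: date) -> str:
    """Label a date with its calendar quarter, e.g. '2023-Q3'."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


class QuarterlyKeys:
    """Hypothetical key holder: one random secret per quarter. The previous
    key is discarded on rotation, so old tokens can't be recomputed."""

    def __init__(self) -> None:
        self.quarter: Optional[str] = None
        self.key: bytes = b""

    def key_for(self, d: date) -> bytes:
        q = quarter_of(d)
        if q != self.quarter:  # assumes events arrive in time order
            self.quarter = q
            self.key = secrets.token_bytes(32)  # old key is overwritten
        return self.key


def token(user_uuid: str, d: date, keys: QuarterlyKeys) -> str:
    return hmac.new(keys.key_for(d), user_uuid.encode(), hashlib.sha256).hexdigest()


keys = QuarterlyKeys()
t1 = token("u-123", date(2023, 7, 4), keys)
t2 = token("u-123", date(2023, 9, 30), keys)  # same quarter: same token
t3 = token("u-123", date(2023, 10, 1), keys)  # new quarter: unrelated token
```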

Analyzing de-identified data

After we’ve taken the measures outlined above to protect individual privacy, the enriched and de-identified data will be streamed to an analytics platform. For this portion of the pipeline, we’ve chosen an open-source platform built by PostHog, which was evaluated by our security and privacy team. As with other aspects of our pipeline, we’ve adapted the platform to our needs rather than sticking with what’s available “out-of-the-box”. Most importantly, as described above, we handle data collection separately from the platform.

Once the data is in PostHog, our product analytics team will build reports and conduct analyses designed to answer predefined business questions. For example, we may want to understand how customers are using our import functionality so we can focus optimizations, such as one-click imports, on the most impactful areas. In the future, we may share details about the insights we’ve gleaned from the telemetry data set and how these have helped us make product improvements.
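As an illustration of this kind of aggregate report, the sketch below counts imports per source and the number of distinct hashed users behind them. The `import_completed` event and its `import_source` property are hypothetical. Tokens change when keys rotate, so a distinct-user count like this is only meaningful within a single quarter.

```python
from collections import Counter, defaultdict


def import_report(events: list[dict]) -> dict[str, dict[str, int]]:
    """Aggregate de-identified events: for each import source, the number
    of imports and the number of distinct hashed users who ran them."""
    counts: Counter = Counter()
    users: defaultdict = defaultdict(set)
    for e in events:
        if e["event_name"] != "import_completed":
            continue
        source = e["properties"]["import_source"]
        counts[source] += 1
        users[source].add(e["user_uuid"])  # hashed token, not a real UUID
    return {s: {"imports": counts[s], "users": len(users[s])} for s in counts}


events = [
    {"event_name": "import_completed", "user_uuid": "a1", "properties": {"import_source": "csv"}},
    {"event_name": "import_completed", "user_uuid": "a1", "properties": {"import_source": "csv"}},
    {"event_name": "import_completed", "user_uuid": "b2", "properties": {"import_source": "browser"}},
    {"event_name": "app_unlock_successful", "user_uuid": "b2", "properties": {}},
]
report = import_report(events)
# {'csv': {'imports': 2, 'users': 1}, 'browser': {'imports': 1, 'users': 1}}
```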

We’ve thought critically about who on our team will need to perform these analyses and have restricted everyone else’s access to our analytics platform.

Conclusion

From the outset, we’ve built our telemetry system with privacy and security in mind. As reflected in our de-identification design, we continue to prioritize customer privacy over more granular data and insights.

As always, thank you for your continued trust and support. We don’t take it for granted and wouldn’t be where we are today without you.

If you have any questions or thoughts about this, please reach out and let us know.

by Hal Ali on
