Zero Trust is having a bit of a moment.

Okta’s 2023 State of Zero Trust report found that 61% of organizations globally have a defined Zero Trust initiative in place. (That’s up from only 16% of companies in 2018.)

Yet even as Zero Trust security reaches new heights of popularity (including via executive order), a backlash is brewing among professionals, who feel the term is being diluted past all usefulness.

At security conferences, endless lines of vendors hawk products, all dubiously labeled “ZTA.” Companies crow about their Zero Trust initiatives while privately making as many exceptions as there are rules. Charlie Winckless, senior director analyst for Gartner, puts it this way: “It’s important that organizations look at the capability and not the buzzword that’s wrapped around it.”

So which is it – is Zero Trust our best hope against a lawless security landscape, or is it just another disposable tech buzzword?

The answer is: a little bit of both. And that raises another question: how did one term come to mean so many things to so many different people?

In this article, we’ll trace this idea’s 20-year history and show how it has changed along the way.

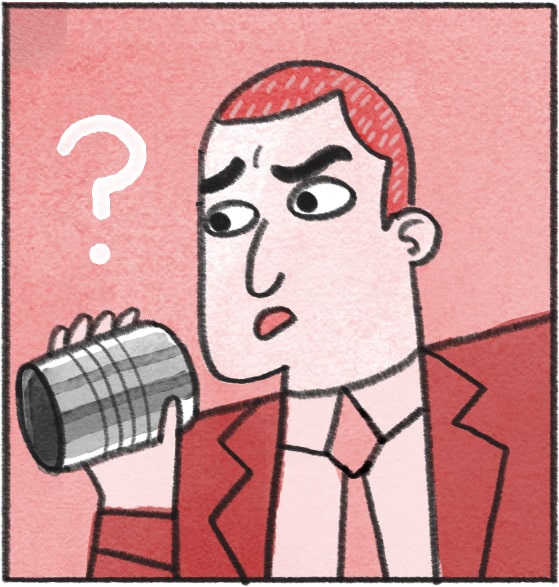

What is Zero Trust?

Before we get into Zero Trust’s origin story back in the 2000s, let’s get on the same page about what Zero Trust means in the 2020s. In a nutshell, Zero Trust is an approach to security designed for environments without a clear network perimeter (such as today’s SaaS-based, hybrid work model). It continuously verifies identity and trustworthiness for every action and restricts access to sensitive resources.

The 2000s: de-perimeterization and black core

The beginnings of the Zero Trust paradigm – starting before it found its now-familiar name – didn’t occur in a purely linear fashion, where one idea was neatly built upon another. Instead, multiple security professionals came up with similar ideas at roughly the same time. That confluence isn’t surprising, since everyone in the security world was responding to the same trends, which were rendering traditional, network-based security obsolete.

Prior to the 2000s, the standard security model relied on a hardened perimeter around a corporate intranet. Access to the network was protected by firewalls and a single login, but once someone was inside, they were essentially treated as trusted. This approach – sometimes called castle-and-moat or M&M (like the candies) – made sense when work was contained by a physical office building and on-prem servers.

But in the 2000s, all that changed. Reliable home internet and public Wi-Fi eroded the physical perimeters around work. Employees, contractors, and partners needed a way to access company data from anywhere. To be clear, this didn’t immediately give rise to Zero Trust; instead, many organizations relied on corporate VPNs, which allowed for secure tunnels into their networks.

VPNs helped companies keep their employees' sessions from being hijacked every time they went to a Starbucks. Still, if a VPN was compromised, so was the entire network. Gradually, the idea of a “hardened perimeter” started to look less and less feasible. In 2005, the Department of Defense proposed transitioning to a “black core” architecture that focused on securing individual transactions via end-to-end encryption.

It’s unsurprising that an organization as vast as the military came up with black core (later called colorless core) since they were racing to create a Global Information Grid (GIG) in order to “integrate virtually all of the information systems, services, and applications in the US Department of Defense (DoD) into one seamless, reliable, and secure network.” A network that large could not depend on a single perimeter and needed an approach to security that allowed for mobility and interoperability.

Another major mid-aughts development was a wave of devastating worms like Blaster and SoBig hitting organizations from the University of Florida to Lockheed Martin. These worms evaded firewalls and proliferated wildly within networks.

As Paul Simmonds said in his seminal 2004 presentation: “We are losing the war on good security.”

Simmonds proposed a framework called “de-perimeterization,” which he also called “defense in depth.” De-perimeterization was an early forebear of Zero Trust (and still a better name for it, IMO). Some of the things Simmonds called for – such as cross-enterprise trust and authentication and better standards for data classification – are still parts of ZT today. However, in 2004, Simmonds still couldn’t imagine a non-VPN approach to creating secure connections, much less the dominance of individually gated cloud apps.

Forrester and the birth of the Zero Trust model

The term “Zero Trust Model” didn’t appear on the scene until 2009, when it was coined by Forrester’s John Kindervag. Kindervag’s landmark 2010 report introduced three core concepts of the Zero Trust Model:

Ensure all resources are accessed securely regardless of location. This demands the same level of encryption and protection for data moving within a network as for external data.

Adopt a least privilege strategy and strictly enforce access control. The idea of “least privilege” – that people can access only the data they need to do their jobs – predates Zero Trust, but is nevertheless part of its foundation. Role-based access control – people being given access to resources based on their role – is one way to put least privilege into action, though Kindervag doesn’t claim it’s the only solution.

Inspect and log all traffic. In Zero Trust architecture, it’s not enough to establish trust once a user has verified their identity. Instead, Kindervag argues, “By continuously inspecting network traffic, security pros can identify anomalous user behavior or suspicious user activity (e.g., a user performing large downloads or frequently accessing systems or records he normally doesn’t need to for his day-to-day responsibilities).”
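As a rough illustration of how the second and third concepts fit together, here is a minimal Python sketch of a deny-by-default, role-based access check that logs every request. The role names, resource names, and the `check_access` helper are all hypothetical; nothing here comes from the Forrester report itself.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping, invented for illustration only.
ROLE_PERMISSIONS = {
    "engineer": {"source-code", "build-logs"},
    "recruiter": {"candidate-records"},
}


@dataclass
class AccessLog:
    """In-memory stand-in for a real audit log."""
    entries: list = field(default_factory=list)

    def record(self, user, role, resource, allowed):
        self.entries.append((user, role, resource, allowed))


def check_access(user, role, resource, log):
    """Least privilege: allow only what the role explicitly grants."""
    allowed = resource in ROLE_PERMISSIONS.get(role, set())
    # Log every request, allowed or denied, so unusual patterns can be reviewed.
    log.record(user, role, resource, allowed)
    return allowed


log = AccessLog()
print(check_access("dana", "engineer", "source-code", log))        # True
print(check_access("dana", "engineer", "candidate-records", log))  # False
```

Unknown roles get an empty permission set, so anything not explicitly granted is denied, and denied requests are logged alongside approved ones.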

Each of these concepts has survived in some form in our current understanding of Zero Trust principles, although each has evolved. For instance, the concept of “continuous authentication,” or even multi-factor authentication, is missing here – in fact, the word “authentication” only appears once, in a footnote.

“Microperimeters” and the role of the insider threat

The 2010 Forrester report was a landmark document, but it introduced two ideas that continue to cause tension and confusion in the Zero Trust world to this day.

The first issue is how Kindervag’s ideal Zero Trust Model relates to the corporate network and its perimeter. For all that Kindervag embraces de-perimeterization and states that “the perimeter no longer exists,” this report can’t quite abandon the network model and continually refers to threats being either “internal” or “external.”

Kindervag also recommends that security teams “segment your networks into microperimeters where you can granularly restrict access, apply additional security controls, and closely monitor network traffic…” This reintroduction of the perimeter opens the door for vendors to chip away at one of the core ideas of Zero Trust. Microperimeters (or microsegmentation) are one model for ZTA, while firewalls and VPNs are explicitly not a part of the paradigm. Even so, many vendors use the microperimeter idea to jump on the bandwagon.

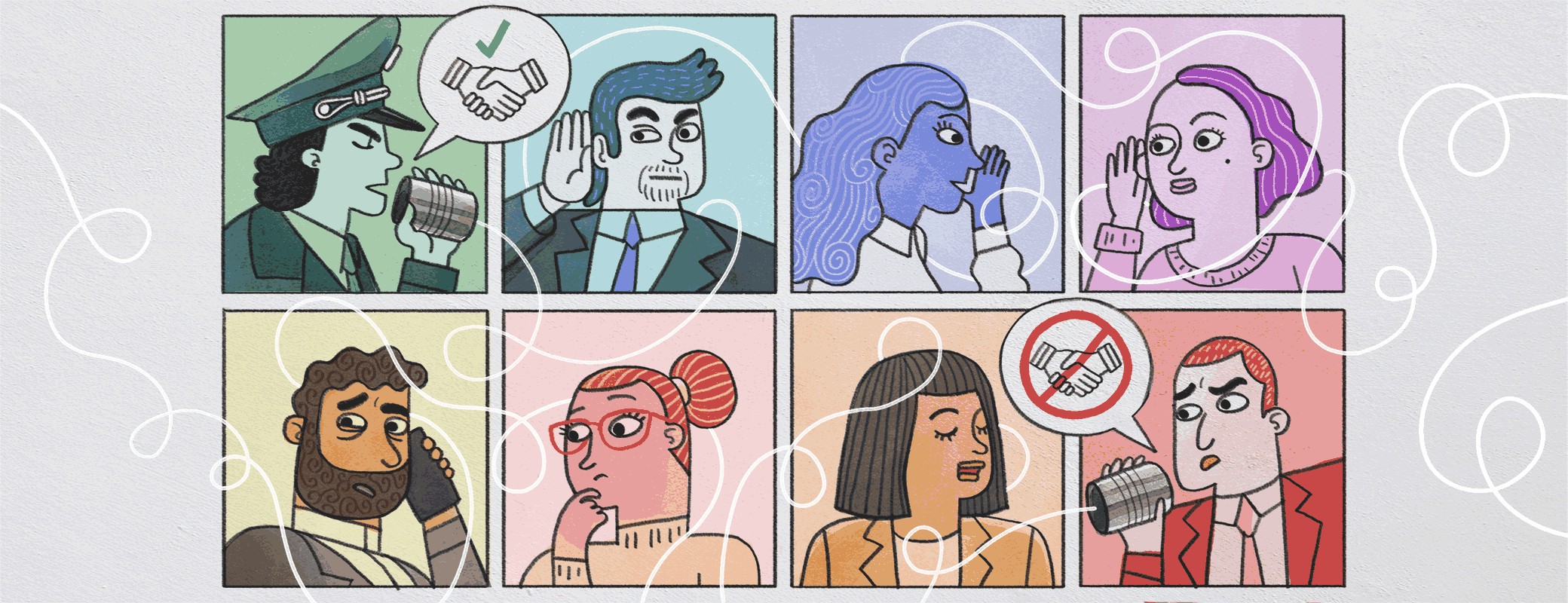

The Forrester report also establishes another defining Zero Trust trait: (over)emphasizing the danger of malicious insiders. The report opens with a long anecdote about Russian spies and goes on to cite Chelsea Manning and Edward Snowden as evidence that bad actors within an organization are your single biggest concern.

But while internal threats are indeed serious, this report glibly conflates three extremely different types of breaches:

Third parties impersonating insiders via stolen credentials or hardware

Employee error, whether through carelessness or ignorance

Deliberate employee malfeasance

In the quote below, you can see that conflation in action where “internal incident” is transformed into the much darker “malicious activities.”

“How serious is the threat? Well, according to Forrester’s Global Business Technographics® Security Survey, 2015, 52% of network security decision-makers who had experienced a breach reported that it was a result of an internal incident, whether it was within the organization or the organization of a business partner or third-party supplier. (see endnote 15) Insiders have much easier access to critical systems and can often go about their malicious activities without raising any red flags.”

This focus on malicious insiders is responsible for some of the worst excesses of so-called Zero Trust security technology, which could be better described as bossware.

Google’s BeyondCorp makes Zero Trust mainstream

In 2014, Google introduced its BeyondCorp initiative, which is widely credited with transforming Zero Trust from “a neat but impractical idea” to “an urgent mandate.” Though the words “Zero Trust” never appear in Google’s announcement – it’s not clear if they were trying to replace the term or had simply never heard of it – BeyondCorp clearly marked an evolution of ZTA.

By 2014, the business world was embracing the cloud revolution, and cloud-based SaaS apps were taking over. Because of that, BeyondCorp was the first version of Zero Trust that could imagine a truly perimeterless world. Its authors announced that “We are removing the requirement for a privileged intranet and moving our corporate applications to the Internet.”

“All access to enterprise resources is fully authenticated, fully authorized, and fully encrypted based upon device state and user credentials. We can enforce fine-grained access to different parts of enterprise resources. As a result, all Google employees can work successfully from any network, and without the need for a traditional VPN connection into the privileged network.”

BeyondCorp had an undeniable influence on security writ large, but not all of its ideas survived in later versions of Zero Trust.

Device trust and BYOD

Nearly every report on ZTA mentions that, in order for the entire concept to work, devices must be in a secure state. In other words, strong authentication, RBAC, and encryption won’t protect your resources from a device that’s infected with malware.

Despite the consensus that device trust is crucial to Zero Trust, few writers include suggestions for how to actually ensure devices are in a secure state. In fact, many writers and vendors claim that Zero Trust can facilitate BYOD policies and reduce the need for endpoint management.

In the BeyondCorp announcement, Google explicitly rejects the idea that BYOD can be compatible with BeyondCorp. Instead, they emphasize that “only managed devices can access corporate applications.” Google issues unique certificates identifying the device and “only a device deemed sufficiently secure can be classed as a managed device.” That’s obviously a little light on details, but still goes further than many other, more recent, Zero Trust guides in sketching out an actual process for ensuring device trust.
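To make the “device state and user credentials” idea concrete, here is a small Python sketch of an access decision that checks both and deliberately ignores network location. It is an illustration under stated assumptions, not Google’s implementation: the `Device` and `User` types, their fields, and the `authorize` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    device_id: str
    managed: bool         # assumption: an inventory flags company-managed devices
    has_valid_cert: bool  # assumption: the device presented a valid certificate


@dataclass(frozen=True)
class User:
    username: str
    authenticated: bool
    groups: frozenset


def authorize(user, device, required_group):
    """Grant access only when both the device and the user check out."""
    # Device state comes first: an unmanaged or uncertified device is refused
    # no matter who is using it.
    if not (device.managed and device.has_valid_cert):
        return False
    if not user.authenticated:
        return False
    # Fine-grained access: the user must belong to the group the resource requires.
    return required_group in user.groups


alice = User("alice", authenticated=True, groups=frozenset({"finance"}))
managed_laptop = Device("laptop-01", managed=True, has_valid_cert=True)
personal_phone = Device("phone-07", managed=False, has_valid_cert=False)

print(authorize(alice, managed_laptop, "finance"))  # True
print(authorize(alice, personal_phone, "finance"))  # False
```

Note that the function has no network or IP address parameter, which mirrors the claim that employees can work from any network without a VPN.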

To be clear, Zero Trust solutions can help enforce a strict enough BYOD policy, but they won’t solve for completely unmanaged devices being allowed into systems.

NIST provides a Zero Trust how-to

At present, the most comprehensive guide to the current Zero Trust security model comes to us from the National Institute of Standards and Technology (NIST).

Rather than proposing a single “right way” to practice Zero Trust, the 2020 report encompasses the breadth of its possibilities, including variations in the underlying architecture, deployments, trust algorithms, and use cases. The report also offers detailed guidance on managing a ZTA transition, from creating an asset inventory to evaluating vendors.

NIST’s description of Zero Trust as an approach to security, rather than a rigid set of policies or technologies, has contributed to this report’s longevity. Even so, parts of the report have already become dated. For instance, the report “attempts to be technology agnostic” when choosing between authentication methods such as username/password, one-time code, and device certificates. From today’s vantage point, it’s easy to see that not all authentication methods are created equal. Passwords, for instance, are particularly weak (though it helps if you use an Enterprise Password Manager), and strong authentication is necessary for effective Zero Trust.
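One way to act on that observation is to rank authentication methods and require a minimum strength per resource. The Python sketch below is purely illustrative: the ranking, the resource names, and the `meets_requirement` helper are assumptions made for this example, not something the NIST report prescribes.

```python
from enum import IntEnum


class AuthStrength(IntEnum):
    # Assumed ordering for illustration only; a real policy would set its own.
    PASSWORD = 1
    ONE_TIME_CODE = 2
    DEVICE_CERTIFICATE = 3


# Hypothetical per-resource minimums.
REQUIRED_STRENGTH = {
    "wiki": AuthStrength.PASSWORD,
    "payroll": AuthStrength.DEVICE_CERTIFICATE,
}


def meets_requirement(resource, method):
    """Return True if the method is at least as strong as the resource demands."""
    # Resources with no explicit entry default to the strongest requirement.
    required = REQUIRED_STRENGTH.get(resource, max(AuthStrength))
    return method >= required


print(meets_requirement("wiki", AuthStrength.PASSWORD))          # True
print(meets_requirement("payroll", AuthStrength.ONE_TIME_CODE))  # False
```

Defaulting unlisted resources to the strongest requirement keeps the policy deny-leaning when someone forgets to classify a new system.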

NIST’s report includes a list of potential threats to ZTA, including stolen credentials, a compromised policy administrator (such as an identity provider), and a lack of interoperability between vendors. It also names an underrecognized risk to the integrity of ZTA: “subversion of ZTA decision process.” In the real world, these policies are vulnerable to abuse by admins and executives who make exceptions to security policies – such as by allowing themselves access to resources on personal devices.

The history of Zero Trust is just beginning

While you may be sick of the name “Zero Trust” (especially after reading this article), the ideas at its core aren’t going anywhere. The more the world is dominated by SaaS apps, the less the idea of a network perimeter makes sense. And in a perimeterless world, strong authentication, encryption, and access control are necessities. Still, that’s just the start of how you reduce attack surface and protect systems.

Part of the reason Zero Trust is so popular (and profitable) is that it’s malleable; for better and for worse, there is no single right way to accomplish it. In fact, part of the point of Zero Trust is that you can’t “accomplish” it at all; you can only practice it. And the interaction between Zero Trust theory and the real-world practice of security will continue to push its evolution for years to come.

If you’d like to hear more data privacy and security stories like this, and learn how to implement Zero Trust, check out our podcast.

by Elaine Atwell on
