We often hear people say that there is a trade-off between security and convenience. Although there is some truth to that, I want to explain why, more often than not, security actually requires convenience. I should warn you, though, that this is going to be one of my most boastful articles to date.

Users of 1Password will certainly have experienced for themselves that security and convenience go hand in hand. Our design goal has always been to make it easier for people to behave securely than to behave insecurely. We have customers who use 1Password only for the convenience, yet they enjoy real security benefits as a result.

We’ve made this point in several places (it’s something I often say in our forum discussions), but it always comes across better if I quote someone else instead of myself. So here is noted security researcher Matt Blaze commenting in “Why (special agent) Johnny (still) Can’t Encrypt”. He discusses a police radio system whose users frequently talked over unencrypted channels while believing their communication was secured:

The unintended cleartext problems […] first and foremost remind us that cryptographic usability matters. All the security gained from using well-analyzed ciphers and protocols, or from careful code reviews and conservative implementation practices, is lost if users can’t reliably figure out how to turn the security features on and still get their work done.

This is not news to researchers in the field, or at least it shouldn’t be. It certainly isn’t news to us, because we’ve designed 1Password with this fundamental truth in mind from the beginning. If some security policy or mechanism becomes onerous or confusing to use, the people it’s designed to protect will circumvent it. If people are forced to use a difficult and confusing system, they are likely to make serious mistakes. At best, a security product should make it easier to get your work done. At worst, it shouldn’t make completing your tasks prohibitively difficult.

If researchers have understood this concept for decades, why does the view still persist that there is a trade-off between security and convenience? I have a few theories.

1. Rules instead of reasons

Most people just want to be told that something is secure and how to use it. They aren’t all that interested in how the thing works. You, reading this article or diving into the deeper parts of our documentation, are an exception. I have a pathological desire to explain things, and I love a job that turns my pathology into something good. But there is nothing wrong with the majority of users who have other interests. We need to make sure that our products work for those people who don’t take a great interest in how things work.

This brings us to rules of thumb. We all follow some rules of thumb without understanding the reasons behind them. Life is too short to investigate everything. Sometimes these rules persist after the reason behind them has disappeared. An example of this is the habit some people still have of underlining for emphasis. No printed document should have underlining in it; the practice is a holdover from the days of typewriters. Here’s another example, this one apocryphal:

I was having dinner at a friend’s house, and before he put the roast in the oven, he cut about an inch off each end. I asked him why, and he said that it makes the roast taste better. I said that I’d never seen this before; he said that he had learned it from his mother but had never really asked about it. So he called his mother to ask why she always cut off the ends of the roast. She said, “Well, with the tiny oven we had back then, a large roast wouldn’t fit, so I had to cut a bit off.”

Rules, without reasons, can turn out to be wasteful or sometimes actively harmful.

Now time for a true story: Back in the days when banks were issuing credit cards to trees, a friend of mine explained what he did with the credit cards he didn’t plan to use. He knew that the cards had to be signed to be valid (it said so right on the card!), so he never signed the ones that he didn’t plan to use. This, of course, made him less secure, because a stolen credit card could then be signed by the thief, whose own signature would easily match what was on the back of the card. But if you don’t think about the reason behind the rule, then his mistake was very reasonable.

Security systems (well, the good ones anyway) are designed by people who fully understand the reasons behind the rules. The problem is that they try to design things for people like themselves—people who thoroughly understand the reasons. Thus we are left with products that only work well for people who have a deep understanding of the system and its components. The fantastic designers and developers here at AgileBits would fall into the same trap if we didn’t constantly remind ourselves that we want to bring a secure password and information management experience to everyone.

2. Your security is my security

Helping other people be secure is a good thing in and of itself. But there is also a selfish motivation: Your security is my security.

I used to hear people say, “Well I don’t do anything sensitive with my computer, so I’m not worried about its security.” I hear that statement less now that more people are doing on-line banking and shopping, but let me use this example anyway. A compromised home computer that is connected to the network, even if there is no data worth stealing on that computer, gets used for other criminal activity. The computer can be used for sending spam, attacking other systems, or hosting fake pharmacy sites.

A compromised computer joins the arsenal of the bad guys even if they don’t do damage to its legitimate owner. This means that the cost of letting your computer be compromised is not borne by you, but is distributed over the rest of the net. Because of this distorted incentive system, many people are unwilling to take on the chore of security if they don’t see the benefit.

I think that many security developers have mistakenly assumed that people are willing to pay a substantial price in convenience for security, forgetting that most home users don’t actually feel the consequences of their own insecure practices. I suspect that one reason why the convenience versus security myth has persisted is that the system developers cared so much about security that they assumed that everyone else did too. These assumptions and their resulting obsession over security meant that little or no effort went into looking at usability.

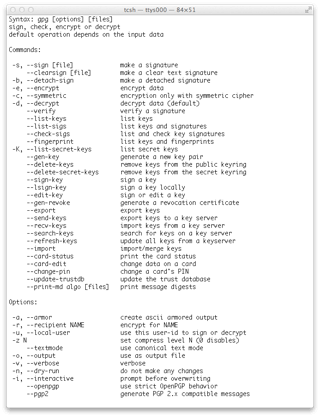

3. Complex options

If you look at the Preferences > Security window in 1Password you will see seven different options.

This is more than we would like. You will also find that although 1Password is extremely powerful, there aren’t boatloads of “Advanced Options” either. For a product that has been under such intensive development (1Password for Mac has been updated over 150 times in its life), you might expect options ranging from “use Blowfish instead of AES” to “store my keys file on an external device”, or additional functions ranging from “manage my Certificate Signing Requests” to “manage my hardware serial numbers”.

To help explain our reluctance to add these seemingly useful features, I’ll quote from an old (2003) article by Niels Ferguson and Bruce Schneier on why IPsec, an internet security technology, never met expectations:

Our main criticism of IPsec is its complexity. IPsec contains too many options and too much flexibility; there are often several ways of doing the same or similar things. This is a typical committee effect. Committees are notorious for adding features, options, and additional flexibility to satisfy various factions within the committee. As we all know, this additional complexity and bloat is seriously detrimental to a normal (functional) standard. However, it has a devastating effect on a security standard.

We will, of course, add features and options when they make things easier and more secure for a large portion of users. But we also resist the temptation toward feature bloat, even when it is “just an advanced option for those who want it”. The thinking that “well it’s just one option that most people can ignore” is fine when it really is just one option, but it never really is just one.

We do look seriously at feature requests; I don’t mean to suggest otherwise. But our concern for usability for everyone is why we tend to be very conservative about adding more options. Never fear, though. There are some great new things in the pipeline that will make 1Password even more useful for everyone. As it has been for more than five years now, 1Password is under active development, and we have some wonderful stuff for you to look forward to.

4. Because the myth is kind of true

Of course there is some truth to the convenience versus security myth. After all, the fact that we have passwords (or other authentication means) at all for websites is an inconvenience. So it would be absurd for me to completely deny the myth.

Most of the trade-offs we face are between security in one respect and security in another. For example, we could store more of 1Password’s indexed information in an unencrypted format (which would slightly speed up some processes) if we didn’t insist on decrypting only the smallest amount of information needed at any one time.

Why Wendy can encrypt

You may have met Wendy Appleseed. She is our sample user if you import our Sample data (Help > Tools > Import Sample Data File). Wendy can get the full benefits of the top-notch algorithms and protocols we use because we take her user experience very seriously; we see convenience as part of security. When we are presented with something that appears to be a conflict between usability and security, we take that as a challenge. Meeting that challenge is hard work, but we love it.

by Jeffrey Goldberg on
