There are a lot of technical terms that mean something very specific to cryptographers but often mean something else to everyone else, including security professionals. Years ago I wrote about what it means to say that a cipher is “broken”. Today’s word is “infeasible”.

The news that sparked this lesson is the use of “computationally infeasible” in an announcement by Slack. Slack has announced that their hashed password database had been compromised, and their message was excellent: They clearly described what was available to attackers (usernames, email address, hashed passwords, and possibly phone numbers and contact information users may have added); they offered clear and useful instructions on what users should do (change passwords, enable two-step verification), and described what they have done and what they will be doing. And – most relevant for the technical discussion here – they have told us how the passwords were hashed.

In this case they said:

Slack’s hashing function is bcrypt with a randomly generated salt per-password which makes it computationally infeasible that your password could be recreated from the hashed form.

It is terrific that they chose to use bcyrpt for password hashing. bcrypt is among the three password hashing schemes that we recommend for sites and services that must store hashed passwords. The other two are PBKDF2 and scrypt. But Slack’s use of the term “computationally infeasible” here illustrates that one must be very careful when using cryptographic technical terms.

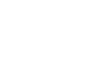

If you have a weak or reused password for Slack, change it immediately. Here is a guide to using 1Password for changing a password. And because the Slack app on iOS makes use of the 1Password App Extension, it is easy to use a strong and unique password for Slack.

If you would like to see how to use Slack’s two-step verification with 1Password take a look at our handy guide on doing just that.

But now back to what is feasible with password hashing.

One way hashing

When services that you log into store your password they should never store those as unencrypted “plaintext”. If they are stored as plaintext it means that anyone who can get their hands on that data file can learn everyone’s passwords. For example, Molly (one of my dogs) uses the same password on Poop Deck as she does on Barkbook. So if Patty (my other dog) learns Molly’s Poop Deck password, she can use it to break into Molly’s Barkbook account as well. This is why it is important not to reuse passwords.

Now suppose that Molly uses the password “rabbit” on Barkbook. (Have I mentioned that Molly is not the smartest dog in the pack?) Barkbook shouldn’t store just “rabbit”, but instead should store a one way hash of rabbit. A cryptographic hash function will transform something like “rabbit” into something like “bQ67vc4yR024FB0j0sAb2WKNbl8=” (base64 encoded).

One of the features of a cryptographic hash function is that it should be quick and easy to compute the hash from the original, but that it should be infeasible to perform the computation in the other direction. That is it should be pretty much impossible to go from “bQ67vc4yR024FB0j0sAb2WKNbl8=” back to “rabbit”. And it is.

Guessing versus reversing

With any halfway decent cryptographic hash function is it infeasible to compute the original from its hash if the original is chosen at random! But if you can make some reasonable guesses about the original then you can use the hash to check your guesses. Because passwords created by humans are not chosen at random, then it does become computationally feasible (and often quite practical) to discover the original based on the hash.

The actual formal definition of “one-way” for a cryptographic hash function, H(x), includes the requirement that x be the output of a uniformly distributed sampling of the domain of H. That is, considering all of the things that you can hash (under some set length), you need to pick something at random. Otherwise a hash function might be invertible. Human created passwords do not meet that requirement and so the “computational infeasibility” of inverting a one way function isn’t applicable when its input is not chosen at random.

So now let’s correct Slack’s statement:

Slack’s hashing function is bcrypt with a randomly generated salt per-password which makes it computationally infeasible that a randomly created password could be recreated from the hashed form.

Modified Slack statement.

This, of course, is why you should use 1Password’s Strong Password Generator for creating your passwords. When your password is chosen randomly with a large set of possibilities, then it really is computationally infeasible to discover the password from the cryptographic hash.

Slowing down guessing

I mentioned that (for now) bcrypt, scrypt, and PBKDF2 are good choices for password hashing. Once the final results are in from the Password Hashing Competition and the dust has settled, we will probably have a good successor to those three. These are built upon cryptographic hash functions, but are designed for hashing specifically for when their input is not selected randomly.

Because cryptographic hashing is something that we have computers do a lot of, one of the things that we want is that it be fast. We want to be able to perform lots and lots of SHA-256 hashes per second without straining a computer’s memory. But if an attacker is going to be guessing passwords to see if they produce the right hash, we want to slow down the hashing. PBKDF2, scrypt, and bcrypt are all designed to require much more computation than a regular hash function to compute a hash. This can slow down an attacker from performing millions of computations per second to just thousands. The actual speed depends on many things, including the hardware that the attacker brings to bear on the system. scrypt, additionally, places specific demands on memory.

So the use of bcrypt means that attackers will need to do more work than they otherwise would to guess passwords stolen from Slack. That is a good thing, but it is not an “infeasible” amount of work.

What’s infeasible?

I started out by saying that I was going to talk about the word “infeasible”, but so far I have just been using it a lot. This is because its definition is abstract, subtle, and hard. I am not going to give a full definition, but I am going to try to get reasonably close. The discussion that follows is inherently technical, and nobody will blame you if instead of reading further you just wish to watch us pour ice water over ourselves. (Remember, that was a thing just last year.)

Welcome back to this article. It get’s progressively more arcane from this point onward.

The notion of infeasible depends on the relationship between the amount of work the defender has to do to secure the system compared to the amount of work that the attacker has to do to break it. A bank vault may take a minute to unlock if you know the combination, but it may take days to break through if you don’t. With cryptographic systems it can take just a fraction of a second to decrypt data if you have a key, but many times the age of the universe to do so if you don’t have the key.

Security parameters

What we want is the amount of work the attacker has to do to be vastly disproportionate to the work that the defender must do. It turns out that this can be stated mathematically, but first we need to introduce the notion of “security parameter” if we want our definition to stand the test of time instead of depending on the speed and power of current computers. So we will talk about how much work the defender and the attacker have to do in proportion to some security parameter.

Let’s pick, for purposes of exposition, an encryption system that operates at a security parameter of 56. The amount of computation that the the defender has to do to decrypt some data with the key is proportional to 56, but the amount of work that the attacker has to do to decrypt the data without the key is proportional to 2⁵⁶. Fifty-six is much much smaller than 2 raised to the 56th power, but today even 2⁵⁶ operations is within the reach of many attackers. Thirty years ago it was within the reach of just a few.

So now let’s suppose that we want to double this security parameter to 112. How much of a work increase might this cause the defender? You might be thinking that it doubles the cost to the defender, but the system I’m thinking of actually tripled the cost to the defender. Tripling the cost for double the security parameter may not seem like a good deal, but doubling the security parameter increased the work of the attacker by another 2⁵⁶, for a total of 2¹¹². This puts it well outside the reach of even the most resourceful attacker for a long time to come.

When we doubled the security parameter in that example, the work to the defender increased linearly while the work to the attacker increased exponentially. We want the work required of the attacker to increase exponentially with the security parameter while for the defender we increase it linearly or polynomially.

Doing time, time, time in an exponential rhyme

If the security parameter is n, we will tolerate it if the amount of work the defender must do is proportional to na for any a > 1. That’s what we mean when we say the work is “polynomial in n“. So if the work goes up with the square or cube of n we might grumble and seek more practical systems, but no matter how big the power that n is raised to gets, this is still a polynomial expression. An algorithm that works this way is called a “polynomial time algorithm”.

For the attacker we want the number of computations needed to be proptional to an expression in which n is in the exponent. So if the work to the attacker is proportional to bⁿ for any b > 1, so that the work is exponential in n. (Those of you who know this stuff, know that I’m leaving some things out and am taking some shortcuts.)

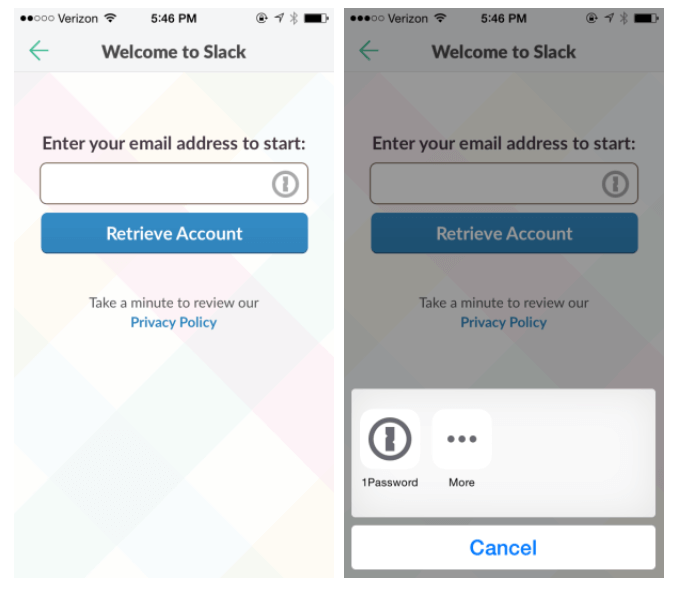

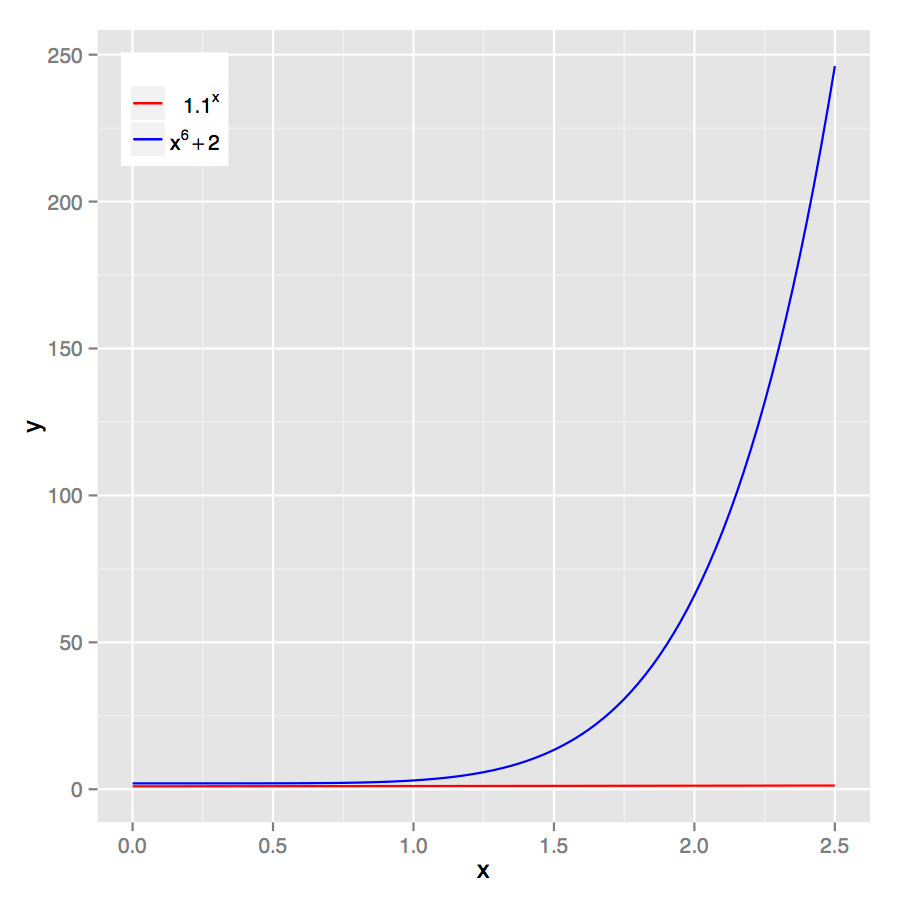

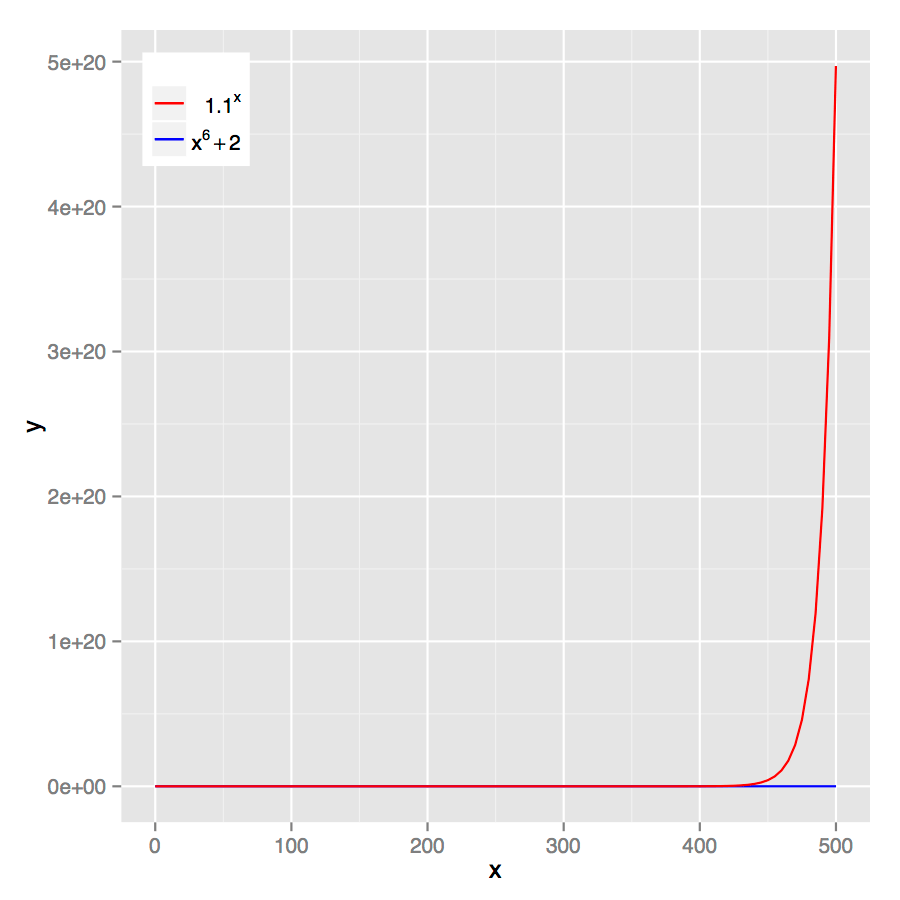

It might seem that a “big” polynomial get us bigger numbers than a “small” exponential, but no matter how much a polynomial function starts out ahead of an exponential, the exponential will always catch up. Let’s compare the exponential y=1.1ˣ with the polynomial y=x⁶ + 2. For values of x below a few hundred, it looks like the polynomial is the runaway winner.

But we inevitably reach a point where the exponential function catches up. For the particularly examples I’ve given, the exponential catches up with the polynomial when x is about 372.73.

Finally, if we go just a bit beyond the point where the exponential overtakes the polynomial, we see that the exponential completely flattens the polynomial.

Some computations will take a number of steps that are polynomial in n (“polynomial time algorithms”), and others will be exponential (“exponential time algorithms”). We say that a task is infeasible if there is no polynomial time algorithm to complete it with a non-negligible chance of success. I have not defined what a non-negligible chance of success is, but as the article appears to be growing in length exponentially, I will leave that discussion for our forums.

When we have this sort of asymmetry, where the work done by the attacker grows exponentially with the security parameter, but grows at most polynomially for the defender, there will always be some security factor beyond which the work to be done by the attacker is so enormously larger than what the defender must do as to just not be an option for any attacker.

Quibbling over terminology

Now that we have a workable definition of “infeasible” and a better understanding of what cryptographic hash functions do, we can take a closer look at Slack’s statement. First let me repeat that their overall statement was excellent, and I fully sympathize with the difficulty involved in writing something about security that is correct, clear, and usable. I’ve taken some shortcuts in my exposition on any number of occasions, and I’ve made my share of errors as well. My point here is not to criticize but instead to use this as an opportunity to explain.

Given what we believe about cryptographic hash functions it is infeasible to discover x if you are only given the hash of x but only if x is chosen at random. Furthermore this is true of any (decent) cryptographic hash function and is not limited to the slow functions that are recommended for password hashing. That is, we don’t need bcrypt or PBKDF2 for that property to hold.

The limits of slow hashes

Slow hashes – specifically designed for password hashing – are built because we know that passwords are not chosen at random and so are subject to guessing attacks. But slow hashes have their limits, and with the notions that have been introduced above, we can now talk about them more clearly. Using a slow hash like PBKDF2 slows things down for both the attacker and for the defender. And the amount of slow-down is roughly the same for both the attacker and for the defender.

If we increase the security parameter (number of iterations) for PBKDF2 the computational cost rises linearly for both the attacker and for the defender. This is unlike the security parameters we use elsewhere in cryptography, where we would like a small (linear or perhaps polynomial) increase in cost to the defender to create a large (exponential) increase for the attacker.

Let’s see how that works out with a concrete, if hypothetical, example. Suppose it is using 40,000 PBKDF2 iterations. Now suppose that you add a really randomly chosen digit to the end of your Master Password. Adding a single random digit will make an attacker do 10 times the amount of work that they would have to do to crack the original password. Adding two digits would make the attacker have to do 100 times the work of the original. Making a password just a little bit longer (with random stuff) makes the work required by the attacker increase exponentially. That is the kind of proportion of work that we like.

Now suppose 1Password uses 40,000 PBKDF2 iterations in deriving your Master Password. To get the same additional work as adding a single digit to your password, you would need to increase the number of PBKDF2 iterations to 400,000. And to get the equivalent of adding two digits, you would need to increase the number of iterations to 4,000,000. Once we have a goodly amount of PBKDF2 iterations, there isn’t that much gained by increasing it by an additional ten or twenty thousand. But there is much to be gained by even a small improvement in a Master Password.

PBKDF2 is terrific, and it is an important part of the defense that 1Password offers if an attacker gets a hold of your encrypted data. But you must still pick a good Master Password because the security parameter is linear for both the defender and the attacker. Unless there is a breakthrough in the slow hashing competition, a strong Master Password will always be required in order to ensure your security can withstand the test of time.

by Jeffrey Goldberg on

by Jeffrey Goldberg on